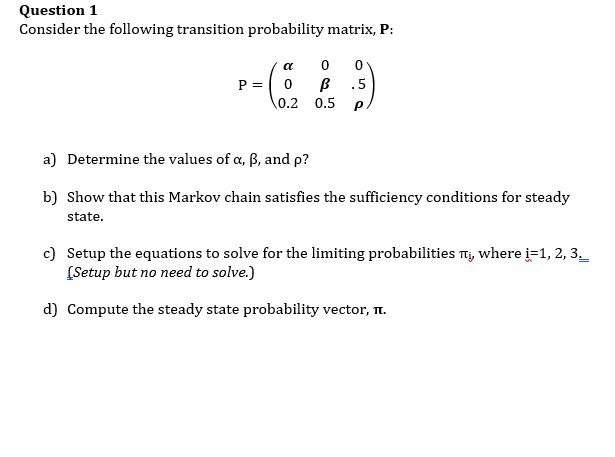

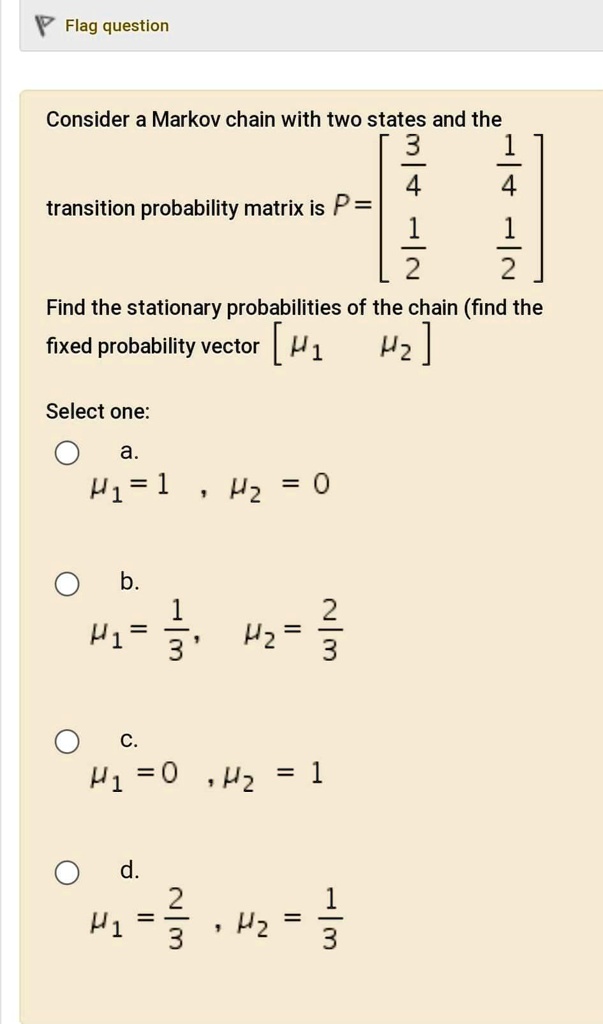

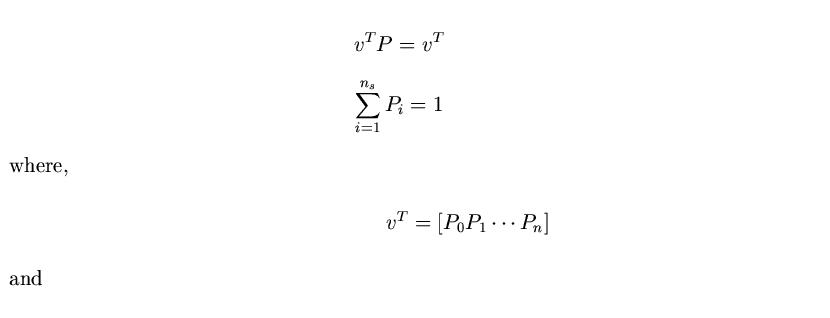

SOLVED: Consider a Markov chain with two states and the 2 4 transition probability matrix is P= 2 2. Find the stationary probabilities of the chain (find the fixed probability

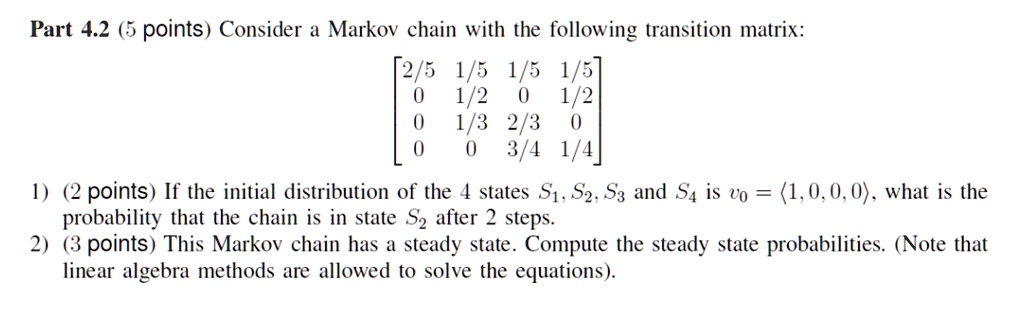

SOLVED: Part 4.2 (5 points) Consider a Markov chain with the following transition matrix: 2/5 1/5 1/5 1/5, 1/2 1/2, 1/3 2/3, 3/4 1/4. I) (2 points) If the initial distribution of

![SOLVED: Which of the following Markov chains has a unique steady state? Select one: S1 S2 S3 S4, S1 S2 S3 S4, S1 S2 S3 S4, S1 S2 S3 S4](https://cdn.numerade.com/ask_images/eed08fdb78e64452974ad2ae1c8f025a.jpg)

SOLVED: Which of the following Markov chains has a unique steady state? Select one: S1 S2 S3 S4, S1 S2 S3 S4, S1 S2 S3 S4, S1 S2 S3 S4

![\[PDF\] Approximation Algorithms for Steady-State Solutions of Markov Chains | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/7a18df762438ed23dcb352a046bc268009e6e047/3-Figure2-1.png)